In the modern content creation space, artificial intelligence has become a reliable tool for many, and reliance on AI-produced work grew massively across the technology landscape in 2023. Yet, with so many generative tools being developed, it is increasingly difficult to keep track of them all. So, we explore one in particular and explain how to train a Stable Diffusion model.

Since the meteoric rise of OpenAI’s ChatGPT, AI tools have been catapulted into the mainstream. They have become vital parts of the modern workflow and are used by countless individuals in a host of fields. In the world of AI-driven image generation, there may not be a more impressive tool than Stable Diffusion.

So, let’s explore the technology, its uses, and how we can optimize it for the best results. Specifically, we’re going to take a deep dive into the algorithm as a whole and how to train the model for the best results on your projects.

What is Stable Diffusion?

As we’ve previously stated, interest in artificial intelligence has never been higher, and AI-based tools have never been more prevalent. However, with so many generative applications to choose from, the options can become a bit overwhelming. So, we focus on one of the most popular: Stable Diffusion.

Firstly, Stable Diffusion models are generative AI models that use machine learning (ML), trained on large collections of captioned images, to create new images from text prompts. Specifically, they rely on what the industry terms the diffusion process: during training, noise is gradually added to images and the model learns to undo it, and at generation time the model starts from random noise and removes it step by step until one final image remains.
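To make the noise-adding half of that process concrete, here is a minimal Python sketch of the forward step from the DDPM formulation that diffusion models such as Stable Diffusion build on. It assumes the linear noise schedule from the original DDPM paper (1e-4 to 0.02 over 1,000 steps); Stable Diffusion's own schedule uses different values. The function names are our own, and a plain list of floats stands in for an image, whereas real implementations operate on tensors.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_1..beta_T, spaced linearly (the original DDPM choice)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t: the share of signal left at step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, t, alpha_bars, rng):
    """Jump straight to step t: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    a = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, noise)]
    return xt, noise


betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)
pixels = [0.5, -0.2, 0.9]  # tiny stand-in for an image scaled to [-1, 1]
rng = random.Random(0)
for t in (0, 250, 500, 999):
    xt, _ = add_noise(pixels, t, alpha_bars, rng)
    print(f"step {t}: signal weight {math.sqrt(alpha_bars[t]):.4f}, x_t = {[round(v, 2) for v in xt]}")
```

Because `alpha_bar` shrinks toward zero, almost none of the original signal survives by the last step, which is why generation can begin from pure random noise.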

The diffusion technique at the heart of these models is itself a form of deep learning (DL), since the network that removes the noise is a deep neural network, and it has proven highly effective, outperforming earlier generative approaches on many image-synthesis benchmarks. Because Stable Diffusion also conditions every denoising step on an encoding of the text prompt, it can handle complex prompts and instructions. Therefore, users can be more precise in their descriptions than older programs allowed.

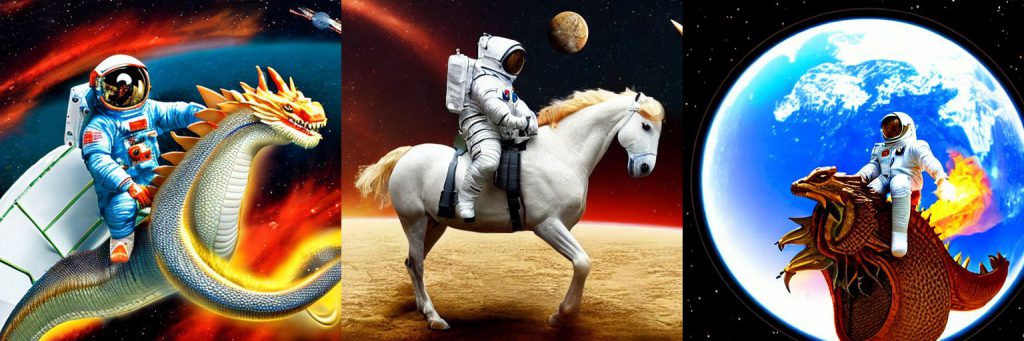

Stable Diffusion represents the latest evolution of AI image generation. Its ML model turns a specific text prompt into a remarkable, high-quality image at the user’s fingertips. Moreover, the techniques behind it have produced a text-to-image program that is head and shoulders above those that came before it.

How Are They Trained?

Now that we know a bit more about the image-generating algorithm itself, let’s look at how it is trained. Understanding the nuances of the training process will leave you better equipped to get the most out of any training you implement yourself.

Primarily, Stable Diffusion models are trained with a denoising objective on large collections of image-caption pairs. Stable Diffusion is a latent diffusion model built from three parts that play different roles: a variational autoencoder (VAE) that compresses images into a smaller latent representation and decodes latents back into images, a text encoder (CLIP in Stable Diffusion v1) that turns captions into embeddings, and a U-Net denoiser that performs the diffusion itself. In the original Stable Diffusion, the VAE and the text encoder are pretrained and kept frozen, so this stage trains only the denoiser.

The denoiser is trained to predict noise. Each training image is first encoded by the VAE into a latent, and its caption is encoded into text embeddings.

The process begins by picking a random timestep and adding the corresponding amount of Gaussian noise to the latent: early timesteps add a little noise, while late ones bury the image almost entirely. The denoiser then receives the noisy latent, the timestep, and the text embeddings, and predicts the noise that was added.

The mean squared error between the predicted noise and the actual noise is the training loss. The denoiser’s weights are updated to reduce that loss, and the process repeats over many batches. A model that has learned to strip noise away this reliably can, at generation time, build an image out of pure noise, guided by the prompt.
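Stable Diffusion’s denoiser is trained to predict the noise that was added to a latent, scored with a mean squared error. Below is a minimal Python sketch of that loss for a single training example, assuming the same illustrative DDPM-style linear schedule (not Stable Diffusion’s exact values). `predict_noise` stands in for the U-Net; the real one also receives text embeddings, which this toy omits. The two models at the bottom are hypothetical stand-ins used only to show that the loss rewards accurate noise prediction.

```python
import math
import random


def make_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal fractions alpha_bar_t for a linear beta schedule."""
    alpha_bars, running = [], 1.0
    for i in range(num_steps):
        beta = beta_start + i * (beta_end - beta_start) / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def training_loss(x0, alpha_bars, predict_noise, rng):
    """Noise x0 to a random step t, ask the model for the noise, and score it with MSE."""
    t = rng.randrange(len(alpha_bars))
    a = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, noise)]
    predicted = predict_noise(xt, t)
    return sum((p - e) ** 2 for p, e in zip(predicted, noise)) / len(noise)


alpha_bars = make_alpha_bars()
latent = [0.3, -0.7, 0.1, 0.5]  # stand-in for a VAE latent


def untrained_model(xt, t):
    """Hypothetical model that always guesses zero noise."""
    return [0.0] * len(xt)


def oracle_model(xt, t):
    """Hypothetical cheat that knows the clean latent, so it recovers the exact noise."""
    a = alpha_bars[t]
    return [(v - math.sqrt(a) * x) / math.sqrt(1.0 - a) for v, x in zip(xt, latent)]


rng = random.Random(0)
print("untrained loss:", training_loss(latent, alpha_bars, untrained_model, rng))
print("oracle loss:", training_loss(latent, alpha_bars, oracle_model, rng))
```

In a real run this loss is averaged over a batch and an optimizer takes a gradient step on the denoiser’s weights; the sketch only shows what is being measured.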

Data Preparation

Before the training of a Stable Diffusion model commences, you first have to prepare the necessary data. This process comes in a few different steps, but it is undeniably crucial. Firstly, you begin with data collection: gathering up-to-date, relevant images for your intended outcome, each paired with a caption that describes it.

Then, you carry on to the data-cleaning step, where you clean up the dataset. This means assessing and eliminating outliers, missing data (such as images without captions), duplicates, corrupt files, and other inconsistencies for the sake of increased accuracy. You will also need to correct certain errors, such as inaccurate captions, and possibly transform the data so it is of better use to the model.

After that, you move on to preprocessing, which puts every example into the consistent format the model expects. For images, this typically means resizing and cropping to a fixed resolution and normalizing pixel values (commonly to the range -1 to 1); dimensionality reduction is handled by the model’s own autoencoder, which compresses each image into a compact latent.
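The cleaning and normalization steps can be sketched in plain Python. The record format here (dicts with `width`, `height`, `caption`, and raw `data` bytes) is a hypothetical stand-in for a real image dataset, and the 512-pixel cutoff is an assumed threshold chosen to match Stable Diffusion v1’s 512x512 resolution. Resizing and cropping would need an image library and are left out.

```python
import hashlib

MIN_SIDE = 512  # hypothetical cutoff, matching Stable Diffusion v1's 512x512 resolution


def clean_dataset(records):
    """Keep records that are large enough, have a caption, and are not byte-for-byte duplicates."""
    seen_hashes = set()
    kept = []
    for rec in records:
        if min(rec["width"], rec["height"]) < MIN_SIDE:
            continue  # too small to train on at the target resolution
        caption = rec["caption"].strip()
        if not caption:
            continue  # text-to-image training needs a caption for every image
        digest = hashlib.sha256(rec["data"]).hexdigest()
        if digest in seen_hashes:
            continue  # exact duplicate of an image we already kept
        seen_hashes.add(digest)
        kept.append({**rec, "caption": caption})
    return kept


def normalize_pixels(values):
    """Scale 8-bit pixel values (0..255) to the range [-1, 1]."""
    return [v / 127.5 - 1.0 for v in values]


records = [
    {"width": 768, "height": 512, "caption": " a red bicycle ", "data": b"img-1"},
    {"width": 768, "height": 512, "caption": "a red bicycle", "data": b"img-1"},  # duplicate bytes
    {"width": 256, "height": 256, "caption": "a tiny icon", "data": b"img-2"},  # too small
    {"width": 1024, "height": 1024, "caption": "", "data": b"img-3"},  # no caption
]
print(len(clean_dataset(records)))  # 1
print(normalize_pixels([0, 255]))  # [-1.0, 1.0]
```

Hashing the raw bytes only catches exact duplicates; near-duplicates (resized or re-encoded copies) need perceptual hashing or embedding similarity.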

After you prepare the data, your next step is to approach the design of the model. This is the first real step in the training beyond the preliminary data requirements.

Designing the Model

As previously stated, this next step is the process of designing the Stable Diffusion model. This involves choosing which algorithms, parameters, and architecture the model will use. It is therefore important to know the building blocks that Stable Diffusion combines.

These include a variational autoencoder (VAE) that maps images to and from a compact latent space, a U-Net (a deep convolutional neural network with attention layers) that performs the denoising, and a text encoder that conditions the U-Net on the prompt. When selecting a specific model, it is also important to weigh factors such as model complexity, dataset size, and the level of accuracy you need. In practice, most people fine-tune an existing pretrained Stable Diffusion checkpoint rather than training one from scratch, which demands an enormous dataset and a great deal of computing power.
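The VAE is a large part of what makes Stable Diffusion practical: in Stable Diffusion v1 it shrinks each spatial side of the image by a factor of 8 and produces 4 latent channels, so the U-Net denoises a much smaller array than the raw image. A quick Python calculation of that saving (the helper name `latent_shape` is our own):

```python
def latent_shape(height, width, downsample=8, latent_channels=4):
    """Shape (channels, height, width) of the latent an SD v1-style VAE produces for an image."""
    if height % downsample or width % downsample:
        raise ValueError("image sides must be multiples of the downsampling factor")
    return (latent_channels, height // downsample, width // downsample)


shape = latent_shape(512, 512)
pixel_values = 3 * 512 * 512  # an RGB image
latent_values = shape[0] * shape[1] * shape[2]
print(shape, pixel_values / latent_values)  # (4, 64, 64) 48.0
```

Working on 48 times fewer values per image is a major reason latent diffusion is cheaper to train than running diffusion directly on pixels.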

Training Stable Diffusion

Now it is time to begin training, and it is vital to know what tools and platforms you will be able to use for such a task. Google Colab and Jupyter Notebooks provide the interactive environments that are critical for running experiments and generating images, while frameworks such as PyTorch (used by the original Stable Diffusion code) or TensorFlow handle the training itself.

Now, there are a few steps that you will need to remember when training a Stable Diffusion model; let’s break them down here:

- Divide the Data: Firstly, you are going to want to divide the data into two specific sets: a training set and a validation set. The training set is used to train the model, while the validation set is used to evaluate its results.

- Select a Model: Next, choose the Stable Diffusion architecture or pretrained checkpoint you will train, based on the options and factors we have previously mentioned.

- Train It: This is where the training of your Stable Diffusion model begins, typically with a framework such as PyTorch or TensorFlow. It is also important to know the time frame that will be in play: training can take a long time, depending on the size of the dataset and the hardware available.

- Evaluation: After the model has been trained, evaluate its performance. This is where the validation set becomes critical, because it contains examples the model never saw during training.

- Generate Images: Finally, you get to see the fruits of all of your work. When you are satisfied with the model’s overall performance, feed it a random noise vector, along with a text prompt, and generate some images.
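The divide and generate steps can be sketched in plain Python. The split helper is generic; the sampler follows the DDPM ancestral sampling rule under an illustrative 50-step linear schedule, and `placeholder_model` is a hypothetical stand-in for a trained, prompt-conditioned U-Net. Stable Diffusion tools usually offer faster samplers (such as DDIM), and the final latent would still need to be decoded into pixels by the VAE.

```python
import math
import random


def train_val_split(items, val_fraction=0.1, seed=0):
    """Shuffle reproducibly, then hold out val_fraction of the items for validation."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]


def sample(size, betas, predict_noise, rng):
    """DDPM ancestral sampling: start from pure noise and denoise one step at a time."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    x = [rng.gauss(0.0, 1.0) for _ in range(size)]  # the random noise vector
    for t in reversed(range(len(betas))):
        beta = betas[t]
        eps = predict_noise(x, t)
        coef = beta / math.sqrt(1.0 - alpha_bars[t])
        # Mean of x_{t-1}: subtract the scaled predicted noise, then rescale.
        mean = [(v - coef * e) / math.sqrt(1.0 - beta) for v, e in zip(x, eps)]
        if t > 0:
            # Add fresh noise with variance beta_t (one common choice of sigma_t^2).
            x = [m + math.sqrt(beta) * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean  # the final step adds no noise
    return x


def placeholder_model(x, t):
    """Hypothetical stand-in for a trained U-Net; always predicts zero noise."""
    return [0.0] * len(x)


train_set, val_set = train_val_split(range(100))
print(len(train_set), len(val_set))  # 90 10

betas = [1e-4 + i * (0.02 - 1e-4) / 49 for i in range(50)]  # short illustrative schedule
latent = sample(4, betas, placeholder_model, random.Random(0))
```

Fixing the shuffle seed keeps the split reproducible, so validation numbers from different training runs stay comparable.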

Things to Remember

Before we leave you to generate to your heart’s content, let’s go over some things to remember. First, use high-quality data. When it comes to training, the quality of your data has a massive impact on the overall effectiveness of the model.

Additionally, experiment with different hyperparameters, such as the learning rate, batch size, and number of training steps, because they affect how the model learns from the data. Finally, make sure your training setup is sound and monitor the progress your model is making along the way, for example by tracking its loss on the validation set.
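To illustrate sweeping one hyperparameter while monitoring progress, here is a deliberately tiny Python sketch. A toy linear model trained by gradient descent stands in for a real diffusion run, and the learning rates are arbitrary illustrative values, not recommendations for Stable Diffusion. Each run records its validation loss after every epoch, and the winner is the learning rate with the lowest final validation loss.

```python
def train_linear(lr, epochs, train, val):
    """Fit y = w*x + b by gradient descent on MSE; return validation loss after each epoch."""
    w, b = 0.0, 0.0
    history = []
    n = len(train)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in train) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in train) / n
        w -= lr * grad_w
        b -= lr * grad_b
        val_loss = sum((w * x + b - y) ** 2 for x, y in val) / len(val)
        history.append(val_loss)  # monitor progress on held-out data every epoch
    return history


train = [(i / 20, 3 * (i / 20) + 1) for i in range(20)]
val = [((i + 0.5) / 20, 3 * ((i + 0.5) / 20) + 1) for i in range(20)]

results = {lr: train_linear(lr, 300, train, val) for lr in (0.01, 0.1, 0.5)}
for lr, history in results.items():
    print(f"lr={lr}: val loss after epoch 1 = {history[0]:.4f}, after epoch 300 = {history[-1]:.6f}")
best_lr = min(results, key=lambda lr: results[lr][-1])
print("best learning rate:", best_lr)
```

Keeping the whole per-epoch history, rather than only the final number, is what lets you spot a run that has stalled or started to diverge.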